"Knowledge + Data" Driven AIGC in Brain Image Computing For Alzheimer's Disease Analysis

Date: 19-01-2024

Generative AI has a valuable application in brain network analysis, where deep learning is used to generate a patient's brain network from multimodal images. However, existing data-driven models rely heavily on large volumes of high-quality images; when such data are limited, the resulting models can be suboptimal and may fail to accurately capture how brain networks evolve. These models also lack interpretability, which makes it difficult to give abnormal connectivity patterns a biologically meaningful interpretation or to uncover the mechanisms of cognitive disease.

Alzheimer’s disease, marked by structural and functional connectivity alterations in the brain during its degenerative progression, underscores the importance of multimodal image fusion in diagnosis and brain network analysis.

A team of researchers, led by Prof. WANG Shuqiang from the Shenzhen Institute of Advanced Technology (SIAT) of the Chinese Academy of Sciences, has introduced a Prior-Guided Adversarial Learning with Hypergraph (PALH) model for predicting abnormal connections in Alzheimer’s disease. The model integrates anatomical knowledge with multimodal images and uses an AIGC model to generate a unified connectivity network that is both high in quality and biologically interpretable.

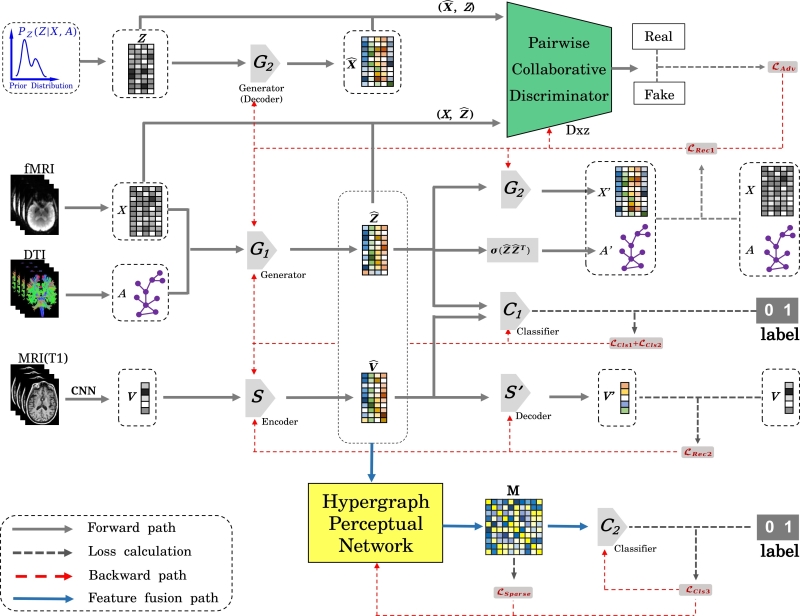

Published in IEEE Transactions on Cybernetics on Jan 14, 2024, PALH comprises a prior-guided adversarial learning module and a hypergraph perceptual network. The prior-guided adversarial learning module leverages anatomical knowledge to estimate the prior distribution and employs an adversarial strategy to learn latent representations from multimodal images. In addition, the pairwise collaborative discriminator (PCD) enhances the model's robustness and generalization by associating the marginal and joint distributions of the imaging and representation spaces.
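As a rough illustration of what prior-guided adversarial learning means, a generic latent-space adversarial objective can be written as follows. This is a textbook formulation in the style of adversarial autoencoders, not the loss reported for PALH. The symbols $E$ (encoder), $D$ (discriminator), $p_{\text{prior}}$ (a prior estimated from anatomical knowledge), and $p_{\text{data}}$ (the distribution of multimodal images) are assumptions introduced here for illustration.

$$
\min_{E}\,\max_{D}\;\; \mathbb{E}_{z \sim p_{\text{prior}}(z)}\big[\log D(z)\big] \;+\; \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log\big(1 - D(E(x))\big)\big]
$$

Under an objective of this kind, the discriminator tries to tell samples drawn from the prior apart from encoded images, while the encoder is pushed to produce latent representations whose distribution matches the prior.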

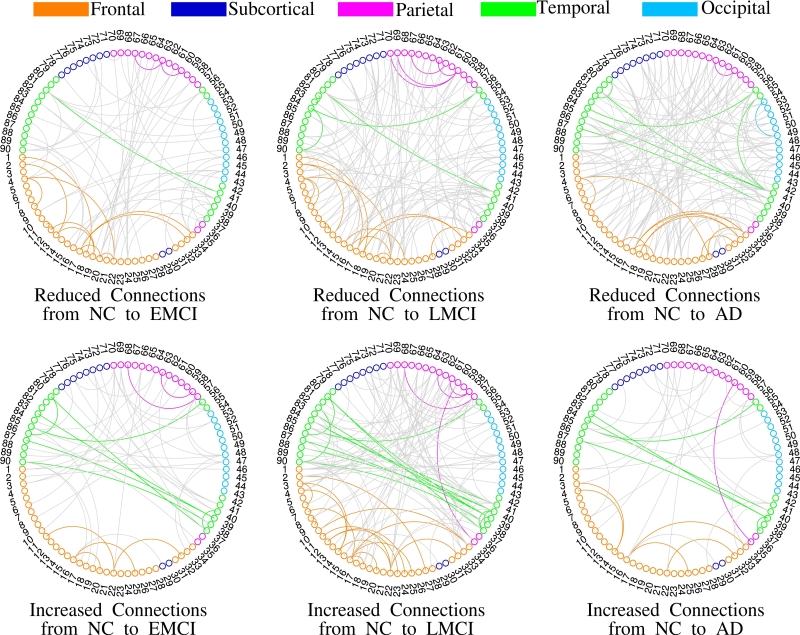

Furthermore, the hypergraph perceptual network (HPN) is introduced to establish high-order relations between and within multimodal images, enhancing the fusion effects of morphology-structure-function information. HPN proves instrumental in capturing abnormal connectivity patterns at different stages of Alzheimer's disease, improving prediction performance and aiding in the identification of potential biomarkers.
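To make the idea of high-order relations concrete, the minimal Python sketch below shows one commonly used normalized hypergraph propagation step, in which a single hyperedge can link more than two brain regions at once. It is an illustration only and not the HPN used in PALH: the function name `hypergraph_propagate`, the toy incidence matrix, and the feature values are all hypothetical, and learnable weights are omitted.

```python
import math


def hypergraph_propagate(H, X, edge_weights=None):
    """One parameter-free hypergraph smoothing step (illustrative only).

    H: incidence matrix, H[v][e] = 1 if node v belongs to hyperedge e.
    X: node features, one row per node.
    Returns Dv^{-1/2} H W De^{-1} H^T Dv^{-1/2} X as nested lists.
    """
    n_nodes, n_edges, n_feat = len(H), len(H[0]), len(X[0])
    w = edge_weights if edge_weights is not None else [1.0] * n_edges

    # Node degree: weighted number of hyperedges that contain the node.
    dv = [sum(w[e] * H[v][e] for e in range(n_edges)) for v in range(n_nodes)]
    # Hyperedge degree: number of nodes inside the hyperedge.
    de = [sum(H[v][e] for v in range(n_nodes)) for e in range(n_edges)]
    if min(dv) <= 0 or min(de) <= 0:
        raise ValueError("every node and every hyperedge must be non-empty")

    inv_sqrt_dv = [1.0 / math.sqrt(d) for d in dv]

    # Step 1: gather normalized node features into each hyperedge.
    edge_feat = [
        [
            w[e] / de[e]
            * sum(H[v][e] * inv_sqrt_dv[v] * X[v][f] for v in range(n_nodes))
            for f in range(n_feat)
        ]
        for e in range(n_edges)
    ]

    # Step 2: scatter hyperedge features back to their member nodes.
    return [
        [
            inv_sqrt_dv[v] * sum(H[v][e] * edge_feat[e][f] for e in range(n_edges))
            for f in range(n_feat)
        ]
        for v in range(n_nodes)
    ]


# Hypothetical toy example: 4 brain regions, 2 hyperedges.
# Hyperedge 0 groups regions {0, 1, 2}; hyperedge 1 groups regions {2, 3}.
H = [[1, 0], [1, 0], [1, 1], [0, 1]]
X = [[1.0], [2.0], [3.0], [4.0]]  # one made-up feature per region
smoothed = hypergraph_propagate(H, X)
print(smoothed)
```

In this toy example, regions 0 and 1 belong to exactly the same hyperedge, so they receive the same smoothed value regardless of their input features, whereas region 2 mixes information from both hyperedges. A pairwise graph would need several separate edges to express the same three-region grouping.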

By modeling a complex multilevel mapping of structure-function-morphology information, PALH significantly enhances Alzheimer's disease diagnosis performance and identifies relevant connectivity patterns associated with the disease progression. Comprehensive results demonstrate the superiority of PALH over other related methods, with identified abnormal connections aligning with previous neuroscience discoveries.

Prof. WANG said, "The proposed model is a unified prior-guided AIGC framework applied for the first time to evaluate changing characteristics of brain connectivity at different stages of Alzheimer’s disease." The first author, ZUO Qiankun, noted, "Patients with Mild Cognitive Impairment (MCI) exhibit damaged connections and compensate for functional activities with strengthened connections. As the disease progresses, more severe connectivity damage occurs, leading to irreversible cognitive decline."

Fig. 1 Architecture of PALH. (Image by SIAT)

Fig. 2 Visualization of altered connections at different stages of Alzheimer’s disease. (Image by SIAT)

Media Contact:

ZHANG Xiaomin

Email: xm.zhang@siat.ac.cn